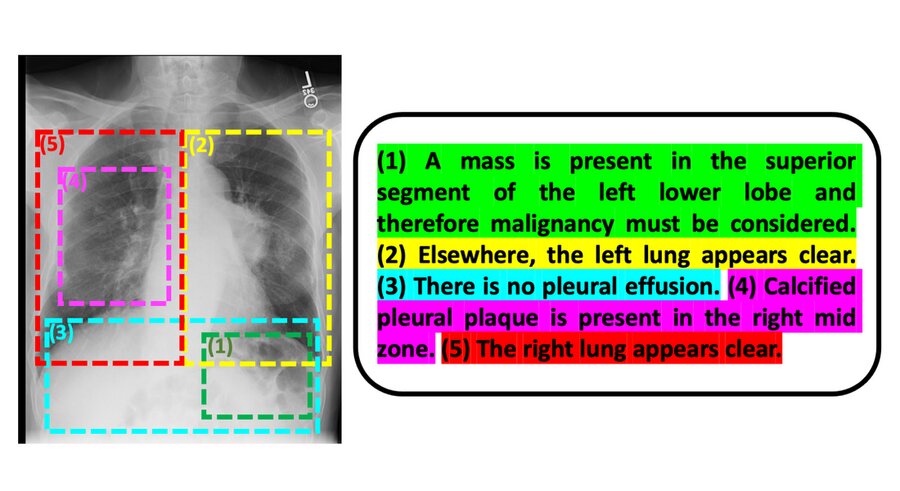

Researchers at MIT are exploring the use of artificial intelligence to automate the process of diagnosing and treating patients by combining X-rays and other medical images with the radiology reports that accompany them. “We want to train machines that are capable of reproducing what radiologists do every day,” says Ruizhi Liao, a recent PhD graduate at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). Because the vast body of radiology reports accompanying medical images is written by radiology professionals, the researchers hope that learning from these reports will improve the interpretive abilities of machine learning algorithms.

The researchers are also using “mutual information,” a statistical measure of the interdependence of two variables. The two variables they consider when building their model are the text of the radiology reports and the medical images. In this case, “when the mutual information between images and text is high, that means that images are highly predictive of the text and the text is highly predictive of the images,” explains MIT professor Polina Golland.

Beyond being clinically meaningful, the model could have broad applicability. “It could be used for any kind of imagery and associated text, inside or outside the medical realm,” says Golland. “This general approach, moreover, could be applied beyond images and text, which is exciting to think about.”
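To make the idea of mutual information more concrete, here is a minimal Python sketch of the measure Golland describes, for the simple case of two discrete variables: I(X;Y) = Σ p(x,y) · log2[p(x,y) / (p(x) p(y))]. This is not the researchers' model, which works with real images and free-text reports; the function name `mutual_information` and the toy "image finding" and "report phrase" labels are hypothetical, chosen only to mirror the image/text pairing.

```python
import math
from collections import Counter


def mutual_information(pairs):
    """Estimate I(X;Y) in bits from a list of observed (x, y) pairs,
    using empirical (plug-in) probabilities for the joint and marginals."""
    n = len(pairs)
    joint = Counter(pairs)               # counts of each (x, y) combination
    px = Counter(x for x, _ in pairs)    # marginal counts of x
    py = Counter(y for _, y in pairs)    # marginal counts of y
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # Each term compares the joint probability with what independence predicts.
        mi += p_xy * math.log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Hypothetical toy data: an "image finding" paired with a "report phrase".
# Here each finding always appears with the same phrase, so either variable
# fully predicts the other.
dependent = [("opacity", "pneumonia"), ("clear", "normal")] * 50

# Here findings and phrases are paired in every combination equally often,
# so knowing one tells you nothing about the other.
independent = [
    ("opacity", "pneumonia"), ("opacity", "normal"),
    ("clear", "pneumonia"), ("clear", "normal"),
] * 25

print(mutual_information(dependent))    # prints 1.0
print(mutual_information(independent))  # prints 0.0
```

When the labels always co-occur, the estimate is 1 bit (the full uncertainty of a 50/50 variable), matching Golland's description of images and text being highly predictive of each other; when they are paired independently, it drops to 0. This counting-based estimator only suits small discrete vocabularies; continuous data such as pixel values would typically need a different estimation approach.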