Canada outperformed 10 comparable OECD nations in the first two years of its pandemic response, concludes a study in the Canadian Medical Association Journal.

According to Razak et al.[1], the country’s lower numbers of detected cases, COVID-19 deaths, and excess mortalities from all causes were linked to the persistence of Canada’s social restrictions and to Canadians being better vaccinated than people in comparable “peer” nations.

The study ranked the responses from Belgium, Canada, France, Germany, Italy, Japan, the Netherlands, Sweden, Switzerland, the U.K., and the U.S.

While top-ranked in vaccine uptake and the stringency of social restrictions, Canada was second best in disease outcomes, and was the only country of the 11 to detect fewer than 100,000 cases per million people from March 2020 to February 2022. Canada saw 82,700 cases per million people, while the highest case counts occurred in the Netherlands with 313,000 per million and France with 312,000 per million. Japan was deemed an outlier with the lowest reported cases (27,600 per million) and deaths (156 per million) despite having the oldest population and imposing the mildest restrictions.

At first glance, the results of Razak et al.’s paper give the impression that Canada compares favourably to its “peers”. However, because the authors have based their assertion solely on rankings, they have over-emphasised the link between Canada’s top-ranked pandemic responses and its low burden of disease outcomes.

The trouble with rankings

Ranking is one of the simplest systematic methods of performance appraisal in which individual countries are compared with others for the purpose of placing worth on the order they attain. However, if the rankings from Razak et al. are to carry any meaning, then there should be a clear association between interventions and outcomes when all 11 countries are analysed collectively.

In statistics, a rank correlation coefficient (denoted by the Greek letter tau) measures the degree of similarity (or agreement) between two rankings, and it can be used to assess the significance of the relation between them. An increasing correlation coefficient implies increasing agreement between rankings. If interventions drove outcomes, the top-ranked countries for interventions should consistently have the lowest disease burden.

The coefficient lies in the interval [−1, 1] and takes the value:

- 1, if the agreement between the two rankings is perfect; the rankings of the two variables are the same.

- 0, if the rankings are completely independent of each other.

- −1, if the disagreement between the two rankings is perfect; the ranking of one variable is the reverse of the other.
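To make the definition above concrete, here is a minimal Python sketch of Kendall's rank correlation for two rankings without ties (the tau-a form). It is an illustration only, not the R code used for this analysis, and the function name `kendall_tau` is hypothetical. It counts the pairs of countries that the two rankings order the same way (concordant) and the opposite way (discordant).

```python
from itertools import combinations


def kendall_tau(rank_x, rank_y):
    """Kendall's tau-a for two equal-length rankings (assumes no tied ranks)."""
    if len(rank_x) != len(rank_y):
        raise ValueError("rankings must have the same length")
    n = len(rank_x)
    if n < 2:
        raise ValueError("need at least two ranked items")

    concordant = 0
    discordant = 0
    # Compare every pair of items: do both rankings order the pair the same way?
    for i, j in combinations(range(n), 2):
        product = (rank_x[i] - rank_x[j]) * (rank_y[i] - rank_y[j])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1

    total_pairs = n * (n - 1) / 2
    return (concordant - discordant) / total_pairs


ranks = list(range(1, 12))              # ranks 1..11, one per country
print(kendall_tau(ranks, ranks))        # identical rankings: 1.0
print(kendall_tau(ranks, ranks[::-1]))  # fully reversed rankings: -1.0
```

With 11 countries there are 55 pairs, so each pair that switches from concordant to discordant moves the coefficient by 2/55, or about 0.04.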

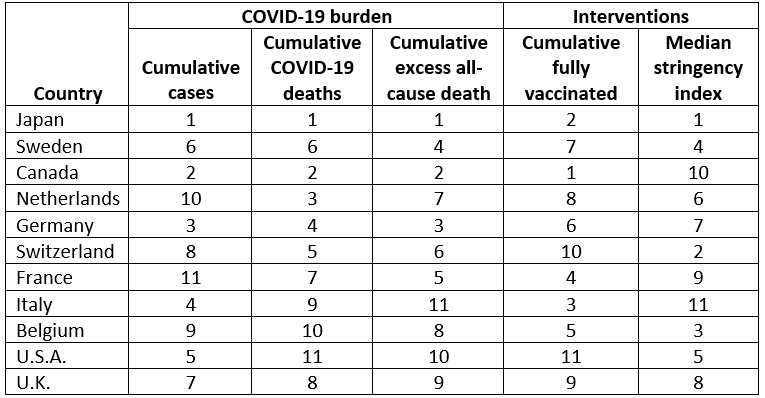

The table below provides a summary of the ranked results discussed in Razak et al.

Rankings of COVID-19 related burden, percentage of the population vaccinated, and stringency index (modified from Supplemental Table 3 in the Appendix of Razak et al.). Rankings are ordered with “1” being the best out of all 11 countries, which is not necessarily the lowest (e.g., Canada is ranked first in cumulative fully vaccinated with the highest fraction of the population receiving at least 2 doses of a COVID-19 vaccine).

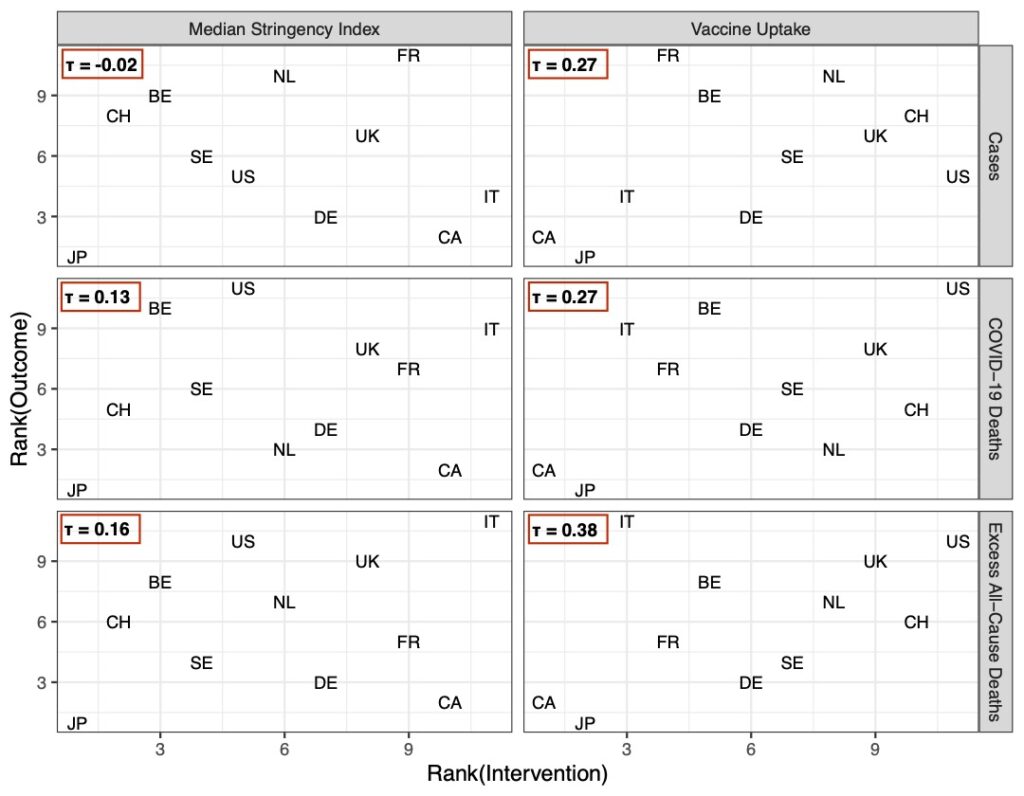

To analyse these data beyond stating how countries stand amongst each other, I calculated the correlation coefficient[2] between the ranking of an intervention (i.e., % fully vaccinated or the median stringency index) and the respective rankings of the cumulative cases, COVID-19 deaths, and excess all-cause mortalities. This exercise generates three separate correlation coefficients per intervention.

Across all 11 countries, the median stringency index values, on one hand, had nearly independent ranks (i.e., almost no correlation) to cumulative case counts (τ = −0.02), cumulative confirmed COVID-19 deaths (τ = 0.13), and cumulative excess mortality from all causes (τ = 0.16).

The rankings for uptake of two vaccine doses, on the other hand, possessed some similarity to a country’s rank of cumulative case counts (τ = 0.27), confirmed COVID-19 deaths (τ = 0.27), and cumulative excess mortalities from all causes (τ = 0.38).

While these latter ranks are not completely independent, they are, at best, only moderately better than what you would expect from a dice-rolling contest (see figure below); they do not show that one country limited the impact of the pandemic better than its “peers”.
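The dice-rolling comparison can be explored with a small simulation. The sketch below is an assumption-laden illustration in Python, not part of the original analysis: it shuffles the ranks 1 to 11 at random many times and reports how often pure chance produces a coefficient at least as far from zero as a chosen threshold. The function names, the number of trials, and the random seed are all hypothetical choices.

```python
import random
from itertools import combinations


def kendall_tau(rank_x, rank_y):
    """Kendall's tau-a for two equal-length rankings (assumes no tied ranks)."""
    n = len(rank_x)
    score = 0
    for i, j in combinations(range(n), 2):
        product = (rank_x[i] - rank_x[j]) * (rank_y[i] - rank_y[j])
        if product > 0:
            score += 1   # pair ordered the same way in both rankings
        elif product < 0:
            score -= 1   # pair ordered in opposite ways
    return score / (n * (n - 1) / 2)


def chance_of_tau_at_least(threshold, n=11, trials=20000, seed=1):
    """Share of random rankings whose |tau| meets or exceeds the threshold."""
    rng = random.Random(seed)          # fixed seed so the run is repeatable
    base = list(range(1, n + 1))
    hits = 0
    for _ in range(trials):
        shuffled = base[:]
        rng.shuffle(shuffled)          # a ranking unrelated to `base`
        if abs(kendall_tau(base, shuffled)) >= threshold:
            hits += 1
    return hits / trials


# Thresholds taken from the vaccine-uptake coefficients reported in the text.
for tau in (0.27, 0.38):
    share = chance_of_tau_at_least(tau)
    print(f"|tau| >= {tau}: {share:.1%} of random rankings")
```

The larger the reported share, the less a coefficient of that size distinguishes a real association from two unrelated rankings of 11 countries.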

Razak et al., as well as spokespeople for the Canadian government,[3] have celebrated Canada’s ranking even though it carries little meaning.

The scatter plots display the ranked pairs of pandemic responses (columns) and one of three epidemiological outcomes from the 11 countries in the table above (rows). The greater the similarity between a country’s ranks for pandemic responses and its outcomes, the closer together the points will be. The more independent they are, the more spread out, and random looking, the points will be.

‘Alternate reality’ scenarios

Considering Canada’s ranking amongst its “peers”, Razak et al. use its standing as the basis of alternate reality scenarios (or counterfactual reasoning) of how much worse Canada’s COVID-19 outcomes “could have been” with France-like infection rates or U.S.-like vaccine coverage.

An important function of counterfactual reasoning is to serve as a reference point when judging an outcome.[4] Counterfactuals, such as the ones presented by Razak et al., are all too common when favourable outcomes are observed, and serve to provide behavioural prescriptions in future pandemics (i.e., “we proved our interventions saved 70,000 lives last time, so we will do the same again”).

However, as soon as counterfactual events are inserted by removing actual ones, we are telling an imagined story, not one that happened. When Razak et al. adjudicate Canada’s experience with SARS-CoV-2 under French- or American-like scenarios, they have not reflected upon how fundamentally different Canada, as a country, would need to be.

For example, transmission rates depend on host density: the closer people are to one another, the more likely they are to spread infection.[5] For the inhabited areas of Canada to have France’s transmission rate would require a five-fold increase in population density, which implies: one, Canada’s total land area shrinks to 328,669 square kilometres (which is smaller than Newfoundland and Labrador); or two, its population grows to 202 million people (making it the 8th largest country in the world, by population – Canada currently ranks 40th).[6]

In other words, because both represent impossible changes to its inhabited land area or population size, Canada could never have France’s transmission rate.

It is also unlikely that if Canada’s vaccine coverage were U.S.-like, it would have resulted in 68,800 additional deaths. The main reason for this is that vaccine coverage quantifies “how many” people have been vaccinated, not “whom”.

The authors’ scenario hinges on every additional unvaccinated person (approximately 5.9 million people, according to Razak et al.) getting infected, with 1.1% of them – the approximate U.S. case fatality rate for COVID-19 – dying.

Even if this scenario were to be accepted, there is no mention that if few of the 5.9 million unvaccinated people were elderly, or chronically ill, then there’d be far fewer than 68,800 – if any – additional deaths related to COVID-19.
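The arithmetic behind this kind of scenario is a simple product, which the following Python sketch spells out. It is a back-of-envelope illustration, not the authors' actual model: the 5.9 million and 1.1% figures are the rounded values quoted above, while the function name and the lower fatality rates are hypothetical values chosen only to show how sensitive the result is to who the unvaccinated people are.

```python
def additional_deaths(extra_unvaccinated, share_infected, fatality_rate):
    """Expected deaths = people x share infected x fatality rate among the infected."""
    return extra_unvaccinated * share_infected * fatality_rate


EXTRA_UNVACCINATED = 5_900_000   # approximate figure quoted from Razak et al.
SHARE_INFECTED = 1.0             # the scenario assumes everyone is infected

# 0.011 is the approximate U.S. case fatality rate quoted in the text;
# 0.005 and 0.001 are hypothetical rates for a younger, healthier group.
for rate in (0.011, 0.005, 0.001):
    deaths = additional_deaths(EXTRA_UNVACCINATED, SHARE_INFECTED, rate)
    print(f"fatality rate {rate:.1%}: about {deaths:,.0f} additional deaths")
```

With the rounded inputs quoted here, the first line comes out somewhat below the 68,800 figure, which may reflect rounding in the quoted inputs. The point of the sketch is only that the total scales directly with the fatality rate of the specific people left unvaccinated.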

The Razak et al. study is yet another case of data being presented from a narrow point of view, and in a manner that amounts to nothing more than an endorsement of government policy. It is certainly no reason for Canadian politicians and public health officials to be patting themselves on the back.

References & Notes

[1] Canada’s response to the initial 2 years of the COVID-19 pandemic: a comparison with peer countries, Razak F, et al. CMAJ 2022; 194: E870-7

[2] For the interested reader: I used the R packages Kendall: Kendall Rank Correlation and Mann-Kendall Trend Test and NSM3: Functions and Datasets to Accompany Hollander, Wolfe, and Chicken – Nonparametric Statistical Methods, Third Edition to calculate Kendall’s rank correlation coefficient, and its bootstrapped 95% confidence intervals (which are not reported), respectively.

[3] Rebel News, September 15, 2022: https://twitter.com/i/status/1570492440993300481

[4] Pitfalls of Counterfactual Thinking in Medical Practice: Preventing Errors by using more Functional Reference Points; Petrocelli JV. J Pub Health Res 2013

[5] Regulation and Stability of Host-Parasite Population Interactions: I. Regulatory Processes; Anderson RM, May RM. J Animal Ecol 1978; 47: 219-47

[6] World Population Review; last accessed: 3rd October 2022

(David Vickers – BIG Media Ltd., 2022)

So true – sadly…

In other words, the study by Razak et al., 2022 amounts to government propaganda. I would’ve thought that the “peer review” process would’ve picked that up. NO??

It all depends on who reviewed the article. Many journals, including the CMAJ, ask researchers to suggest 2-3 preferred reviewers, as well as reviewers to avoid (i.e., those who might have personal vendettas, professional jealousies, or methodological disagreements) once the manuscript goes out for peer review. The Journal doesn’t have to abide by the author’s recommendations, but the practice almost certainly guarantees at least one positive review.

There’s a quote from Richard Horton (the Editor in Chief of The Lancet) that, in my experience, captures the peer review process quite well [from the Medical Journal of Australia 2000; 172: 148–149]:

“The mistake, of course, is to think that peer review is any more than a crude means of discovering the acceptability — not the validity — of a new finding. Editors and scientists alike insist on the pivotal importance of peer review. We portray peer review to the public as a quasi-sacred process that helps make science our most objective truth teller. But [for those of us who have experienced it], we know that peer review is biased, unjust, unaccountable, incomplete, easily fixed, often insulting, usually ignorant, occasionally foolish, and frequently wrong.”