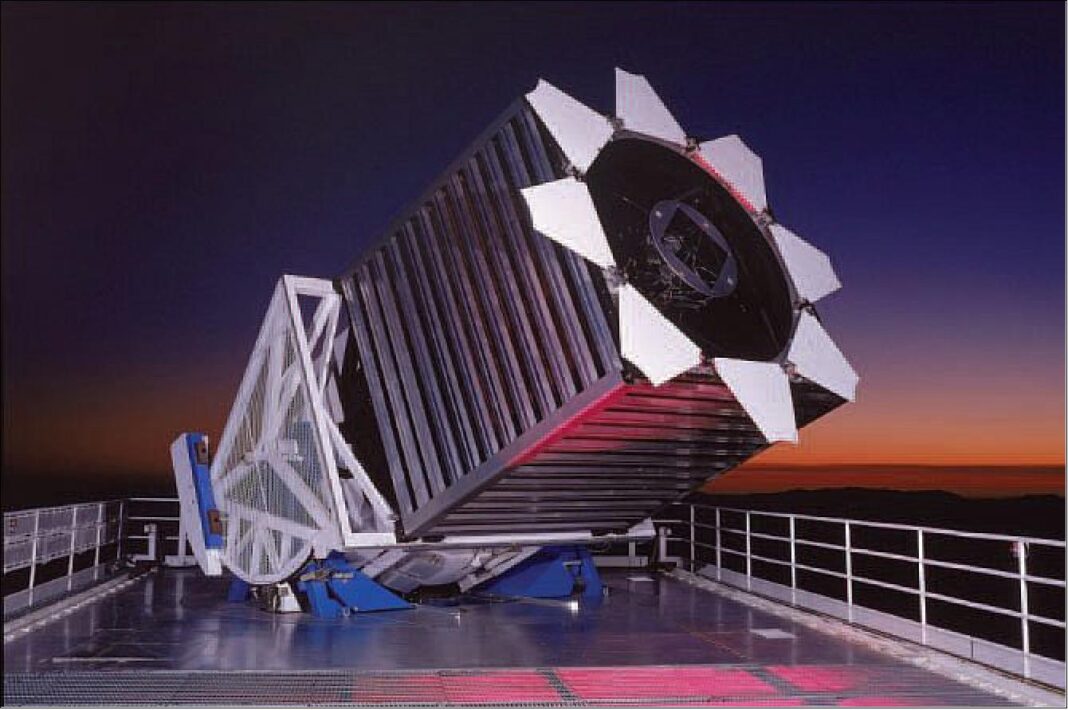

To address the limitations posed by the astronomical amount of data required to explore and catalogue the universe, a team of researchers from Lawrence Berkeley National Laboratory (Berkeley Lab) is investigating self-supervised representation learning, reports Tech Xplore. Like unsupervised learning, self-supervised learning eliminates the need for training labels; instead, it learns by comparison. By applying certain data augmentations, self-supervised algorithms can build “representations” (low-dimensional versions of images that preserve their inherent information), and these algorithms have recently been shown to outperform supervised learning on industry-standard image datasets.

“We are quite excited about this work,” said George Stein, a postdoctoral researcher at Berkeley Lab and one of the first authors of the new paper. “We believe it is the first to apply state-of-the-art developments in self-supervised learning to large scientific datasets, to great results.”

For this proof-of-concept phase of the project, the team used ~1.2 million existing galaxy images from the Sloan Digital Sky Survey. The goal was for the model to learn image representations that could then be used for galaxy morphology classification and photometric redshift estimation, two “downstream” tasks common in sky surveys. In both cases, the team found that the self-supervised approach outperformed state-of-the-art supervised results. The researchers are now preparing to apply their approach to a much larger and more complex dataset, the Dark Energy Camera Legacy Survey, and to extend it to a wider range of applications and tasks.
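To make “learning by comparison” concrete, here is a minimal sketch of a generic InfoNCE-style contrastive loss, the kind of objective used by contrastive self-supervised methods such as SimCLR and MoCo. It is an illustration under stated assumptions, not the Berkeley Lab team's code: the function name `info_nce_loss`, the `temperature` value, and the toy embeddings are all hypothetical, and the embeddings are assumed to come from some encoder applied to two differently augmented views of each image. The loss is low when each image's two views are more similar to each other than to the views of other images in the batch.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def info_nce_loss(z_a: List[Vector], z_b: List[Vector], temperature: float = 0.1) -> float:
    """Mean InfoNCE loss over a batch (hypothetical helper, for illustration).

    z_a[i] and z_b[i] are embeddings of two augmented views of image i (the
    positive pair); every z_b[j] with j != i acts as a negative for z_a[i].
    """
    a = [l2_normalize(v) for v in z_a]
    b = [l2_normalize(v) for v in z_b]
    total = 0.0
    for i, anchor in enumerate(a):
        # Cosine similarity of the anchor to every candidate, sharpened by the temperature.
        logits = [sum(x * y for x, y in zip(anchor, cand)) / temperature for cand in b]
        # Numerically stable log-softmax, evaluated at the positive pair's index i.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum_exp - logits[i]
    return total / len(a)


# Toy embeddings (made up): view2[i] is a slightly perturbed copy of view1[i].
view1 = [[1.0, 0.1], [0.0, 1.0], [-1.0, 0.2]]
view2 = [[0.9, 0.2], [0.1, 0.9], [-0.9, 0.1]]

aligned = info_nce_loss(view1, view2)                      # matching views paired up
shuffled = info_nce_loss(view1, view2[1:] + view2[:1])     # positives deliberately mismatched
print(aligned < shuffled)  # True: training pushes an encoder toward the aligned case
```

During training, an encoder would be updated to minimize this loss, so that augmentations of the same galaxy map to nearby points in representation space; those representations can then be reused for downstream tasks.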

https://techxplore.com/news/2021-07-self-supervised-machine-depth-breadth-sky.html