Unless you were born before 1875, you have most likely been affected by the digitization of music at some point in your lifetime. From iPhones to Bluetooth speakers to car stereos, converting an analog wave into a series of 1s and 0s is a process that almost resembles magic. How does this magic work? How is it possible to harness vibrations in the air and convert them into electrical signals? To understand that, we need to travel back in time to the dazzling 1870s.

Many of you are familiar with our good old-fashioned friend, the record player. But fewer people are aware of its great-grandfather, the phonograph. The phonograph was the brainchild of the great inventor Thomas Edison, and it was the first device that could both record sound and play it back. The way it works is, in fact, quite similar in principle to modern digital audio systems.

Consider the diagram of a phonograph below. The mechanism consists of a wooden cylinder wrapped in metal foil, two horns, each with a thin membrane covering its base, and a needle attached to each membrane. As you speak into the first horn, you turn a hand crank that rotates the cylinder. The horn concentrates the vibrations from your voice onto the membrane, which vibrates and moves the needle along with it, and the needle etches the wave into the metal foil as a groove. Voilà! Your sound is now recorded.

Playing the recording back is the same process in reverse. As the cylinder rotates, the second needle follows the groove made in the foil and vibrates the second membrane, and the second horn amplifies the resulting sound. Of course, this was a very rudimentary implementation. The sound quality of these recordings was abysmal, but it was pretty impressive for something you could create with a few simple materials and a little extra time on your hands.

Figure 1: Diagram of a phonograph, Explain That Stuff!

Jump forward about a century and a half, and we find this same system implemented in many different shapes and forms. At a typical recording studio, you will see three main components: the microphone, the mixing board or computer, and the monitors (speakers). These three components act as an obstacle course for sound waves to navigate. Let’s walk through this process from the beginning.

Say I am standing in the live room of a recording studio, and I say the words “Hi, I’m Lindsey!” into a microphone. The two types of microphones most commonly found in recording studios are dynamic and condenser. For simplicity’s sake, let’s assume I am speaking into a dynamic microphone (condenser mics are more sensitive and thus more complicated in their construction). The sound wave travels through the top of the microphone and reaches the diaphragm. Remember the thin membranes that covered the base of the two horns in the phonograph? A diaphragm is exactly that, but these days it is an extremely thin, sensitive membrane (some are less than 5 micrometres thick). Here is the first obstacle for the sound waves. Dynamic microphones are built to handle loud, punchy sources, so their diaphragms must be sturdy enough to withstand the amplitude of these sound waves. But a thicker, heavier diaphragm responds less readily to very fast vibrations, so some higher frequencies are not strong enough to move it fully and are attenuated. This is always a trade-off, but, fortunately, the quality of the sound is not affected too much, apart from a slight loss of “crispness” to the discerning ear.
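To get a feel for that trade-off, we can model a diaphragm very crudely as a first-order low-pass filter: frequencies well below its cutoff pass almost untouched, and frequencies above it are progressively attenuated. This is a simplified Python sketch, not how any particular microphone is specified. Real diaphragms have resonances and far more complex responses, and the two cutoff values below are hypothetical numbers chosen only for illustration.

```python
import math

def lowpass_gain_db(freq_hz: float, cutoff_hz: float) -> float:
    """Gain of a first-order low-pass filter at freq_hz, in decibels.

    Used here as a crude stand-in for a diaphragm: the heavier it is,
    the lower its (hypothetical) cutoff frequency.
    """
    magnitude = 1.0 / math.sqrt(1.0 + (freq_hz / cutoff_hz) ** 2)
    return 20.0 * math.log10(magnitude)

# Hypothetical cutoffs for a thin diaphragm and a thicker, sturdier one.
THIN_CUTOFF_HZ = 20_000.0
THICK_CUTOFF_HZ = 12_000.0

for f in (100, 1_000, 5_000, 10_000, 15_000):
    thin = lowpass_gain_db(f, THIN_CUTOFF_HZ)
    thick = lowpass_gain_db(f, THICK_CUTOFF_HZ)
    print(f"{f:>6} Hz  thin: {thin:6.2f} dB  thick: {thick:6.2f} dB")
```

In this toy model, low frequencies are essentially unaffected by either diaphragm, while the hypothetical thick one loses noticeably more level at 10 kHz and 15 kHz: the slight loss of crispness described above.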

The diaphragm is attached to a coil of wire that sits around a magnet (see Figure 2). As the vibrations from the diaphragm move the coil back and forth through the magnetic field, an alternating electrical current is induced in it. This process relies on the electromagnetic principle of induction, described by Faraday’s law: when the magnetic flux through a coil changes, for example because the coil is moving through a magnet’s field, a voltage is induced in the coil, proportional to how quickly the flux changes. Because the coil moves back and forth, the induced voltage swings between positive, negative, and zero values, which has the same mathematical structure as a simple sine wave. Of course, with our audio source being a human voice with complex harmonics, the actual wave is much more complicated than a pure sine, but the same principles apply. The signal then makes its way to the computer by way of a balanced XLR cable. A balanced XLR cable has three conductors: two carry the signal in opposite polarity, and the third is a ground. Because interference picked up along the cable affects both signal wires equally, it cancels out when the receiving end takes the difference between them. (There is often an audio interface that handles the signal before passing it to the computer, but that is a topic for another day.)

Figure 2: Cross section of dynamic microphone, Media College.com
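The induction step can be sketched numerically. For a conductor moving through a uniform magnetic field, the induced voltage takes the simple form e = B·l·v: field strength B times the length l of wire in the field times the wire's velocity v. The Python sketch below assumes a pure 440 Hz tone is moving the coil and uses made-up values for B, l, and the displacement; it only illustrates why the output alternates between positive, negative, and zero.

```python
import math

# Hypothetical, illustrative values (not taken from any real microphone).
FIELD_TESLA = 1.0            # magnetic flux density B in the magnet's gap
WIRE_LENGTH_M = 5.0          # total length l of coil wire inside the field
PEAK_DISPLACEMENT_M = 1e-6   # the diaphragm moves about 1 micrometre
TONE_HZ = 440.0              # a pure tone hitting the diaphragm

def coil_position(t: float) -> float:
    """Coil displacement in metres at time t, for a pure sine tone."""
    return PEAK_DISPLACEMENT_M * math.sin(2 * math.pi * TONE_HZ * t)

def induced_voltage(t: float, dt: float = 1e-7) -> float:
    """Motional EMF e = B * l * v, with the velocity v estimated numerically."""
    velocity = (coil_position(t + dt) - coil_position(t - dt)) / (2 * dt)
    return FIELD_TESLA * WIRE_LENGTH_M * velocity

period = 1.0 / TONE_HZ
for fraction in (0.0, 0.25, 0.5, 0.75):
    t = fraction * period
    print(f"t = {fraction:.2f} period -> {induced_voltage(t) * 1000:+.3f} mV")
```

The voltage peaks where the coil moves fastest (as it passes its resting point) and drops to zero at the extremes of its travel, where it momentarily stops before changing direction.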

We have now successfully created the electronic alter ego of our analog wave in the form of an alternating current! But something still does not add up. Computers don’t speak the language of alternating current; they speak binary. To process our audio signal, we need to convert it into something the computer can understand, a job handled by an analog-to-digital converter. Here is where we enter the wonderful world of sampling.

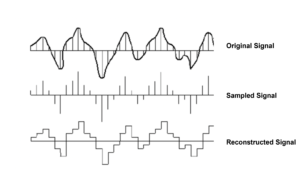

To understand how sampling works, imagine your favourite animated film. As you may know, animation works by capturing thousands of individual frames (photographs of a physical scene in stop-motion or, in the days of hand-drawn cartoons, drawings) with tiny alterations between them to create the illusion of movement. Sampling is just that: thousands of digital snapshots of a sound wave every second to create the illusion of a continuous wave (Figure 3). CD-quality audio, for example, takes 44,100 samples per second.

Figure 3: Example of sampling an analog signal
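In code, sampling simply means evaluating the wave at evenly spaced moments in time. This Python sketch uses a pure 440 Hz sine as a stand-in for a voice and the CD-standard rate of 44,100 samples per second; the function name is just illustrative. A useful rule of thumb (the Nyquist limit) is that a sample rate can represent frequencies up to half its value, so 44.1 kHz covers everything up to 22.05 kHz, just above the roughly 20 kHz upper limit of human hearing.

```python
import math

SAMPLE_RATE_HZ = 44_100  # CD-quality: 44,100 snapshots per second
TONE_HZ = 440.0          # a pure A4 tone standing in for "Hi, I'm Lindsey!"

def sample_sine(freq_hz: float, sample_rate_hz: int, duration_s: float) -> list[float]:
    """Take evenly spaced snapshots of a sine wave with amplitude 1."""
    num_samples = int(sample_rate_hz * duration_s)
    return [
        math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(num_samples)
    ]

samples = sample_sine(TONE_HZ, SAMPLE_RATE_HZ, duration_s=1.0)
print(len(samples))          # 44100 snapshots for one second of sound
print(SAMPLE_RATE_HZ / 2)    # 22050.0 Hz, the highest frequency this rate can represent
print(samples[:5])           # the first few amplitude values, all between -1 and 1
```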

Each sample holds a value between -1 and 1, which is the amplitude of the wave at a moment in time. The amplitude values are stored in a binary format. The number of possible values (between -1 and 1) that a sample can take is determined by what’s called the audio bit depth. Bit depth is another important variable in digital music, as it determines the resolution of the signal we hear. The most common bit depths in studios are 16-bit and 24-bit. A binary number with more bits can take on more values (65,536 for 16-bit versus 16,777,216 for 24-bit), which allows us to pinpoint the amplitude of each sample with more accuracy. This is a similar concept to pixels on a computer screen. It is difficult to appreciate a picture of a mountain landscape on a computer with only 8×8 pixel resolution. The more pixels, the more realistic the picture. The same goes for digital audio.
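Bit depth can be sketched as rounding each sample to the nearest of a fixed set of integer levels. The Python below assumes one simple convention (scaling by the largest positive code, so -1.0 and 1.0 map to -32767 and 32767 at 16-bit); real converters and file formats differ in details such as dithering and how the most negative code is used, and the function names are hypothetical.

```python
def max_code(bit_depth: int) -> int:
    """Largest positive integer a signed sample of this bit depth can hold."""
    return 2 ** (bit_depth - 1) - 1

def quantize(sample: float, bit_depth: int) -> int:
    """Round a sample in [-1.0, 1.0] to the nearest integer level."""
    clipped = max(-1.0, min(1.0, sample))  # anything beyond full scale clips
    return round(clipped * max_code(bit_depth))

def dequantize(code: int, bit_depth: int) -> float:
    """Map an integer level back to a float in [-1.0, 1.0]."""
    return code / max_code(bit_depth)

amplitude = 0.3  # one sample's true amplitude
for bits in (8, 16, 24):
    code = quantize(amplitude, bits)
    error = abs(dequantize(code, bits) - amplitude)
    print(f"{bits}-bit: {2 ** bits:,} levels, stored as {code}, error {error:.1e}")
```

Each extra bit doubles the number of levels and roughly halves the worst-case rounding error, which works out to about 6 dB of extra dynamic range per bit: roughly 96 dB for 16-bit and 144 dB for 24-bit.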

At this point, we have an accurate picture of our analog sound wave neatly packaged into a series of binary values. Now that it’s been converted into a language the computer understands, there is no limit to what we can achieve. We could duplicate the signal and send one copy to the left speaker and one to the right, adjust the volume so that certain parts are louder than others, sprinkle in some reverb for ambience – whatever your heart desires! These days, if you can imagine it, someone has probably created a computer program to do it.
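Once the audio is just a list of numbers, many of these edits are plain arithmetic. The Python sketch below shows three of them under simple assumptions: duplicating a mono signal to two channels, changing volume in decibels, and a single feedback echo. The echo is a deliberately simplified stand-in for reverb (real reverbs combine many delayed reflections), and all function names are hypothetical.

```python
def db_to_gain(db: float) -> float:
    """Convert a volume change in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)

def apply_gain(samples: list[float], db: float) -> list[float]:
    """Make a signal louder (positive db) or quieter (negative db)."""
    gain = db_to_gain(db)
    return [s * gain for s in samples]

def to_stereo(mono: list[float]) -> tuple[list[float], list[float]]:
    """Send identical copies of a mono signal to the left and right channels."""
    return list(mono), list(mono)

def feedback_echo(samples: list[float], delay_samples: int, feedback: float) -> list[float]:
    """Add a quieter repeat of the output every delay_samples samples.

    delay_samples must be at least 1, and feedback below 1.0 so repeats die away.
    """
    out: list[float] = []
    for n, s in enumerate(samples):
        delayed = out[n - delay_samples] if n >= delay_samples else 0.0
        out.append(s + feedback * delayed)
    return out

impulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]  # a single click
left, right = to_stereo(apply_gain(impulse, -6.0))
print(feedback_echo(impulse, delay_samples=2, feedback=0.5))
# [1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.125]
```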

Equalizers (EQs) allow us to pinpoint narrow frequency ranges and cut or boost them to our preferences. This is especially helpful for recording instruments such as the acoustic bass. Think of the deep, rich tone of an upright bass. Just as important as that tone are the string noises: the “thunk” as each string is plucked, the slide of fingers along the fingerboard. These details are instrumental (pun intended) in creating a well-rounded sound and enriching the listening experience. Fats Waller’s Honeysuckle Rose, as sung by Jane Monheit, is an example of an upright bass recorded with such care and attention to these tiny details that it sounds like you are physically sitting inside the instrument.
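A common building block for this kind of EQ is the peaking filter, which boosts or cuts a band around a chosen centre frequency and leaves the rest alone. The Python sketch below uses the widely used peaking-EQ biquad formulas from Robert Bristow-Johnson's Audio EQ Cookbook; it is one standard design, not necessarily what any particular plug-in does. The 2.5 kHz centre, +4 dB boost, and Q of 1.5 are hypothetical settings for bringing out string attack, not values from the recording mentioned above.

```python
import cmath
import math

def peaking_eq(center_hz: float, gain_db: float, q: float, sample_rate_hz: float):
    """Peaking-EQ biquad coefficients (Audio EQ Cookbook), normalised so a[0] == 1."""
    a_gain = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * center_hz / sample_rate_hz
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    b = [1 + alpha * a_gain, -2 * cos_w0, 1 - alpha * a_gain]
    a = [1 + alpha / a_gain, -2 * cos_w0, 1 - alpha / a_gain]
    a0 = a[0]
    return [x / a0 for x in b], [x / a0 for x in a]

def response_db(b, a, freq_hz: float, sample_rate_hz: float) -> float:
    """Magnitude response of the biquad at freq_hz, in decibels."""
    z_inv = cmath.exp(-1j * 2 * math.pi * freq_hz / sample_rate_hz)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20 * math.log10(abs(num / den))

def apply_biquad(b, a, samples: list[float]) -> list[float]:
    """Filter a list of samples with a direct form I biquad."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

FS = 44_100  # sample rate in Hz
b, a = peaking_eq(center_hz=2_500, gain_db=4.0, q=1.5, sample_rate_hz=FS)
for f in (50, 500, 2_500, 10_000):
    print(f"{f:>6} Hz: {response_db(b, a, f, FS):+.2f} dB")
```

The printout should show close to 0 dB far from the centre and the full +4 dB at 2.5 kHz; a negative gain_db turns the same filter into a cut. `apply_biquad` is how the coefficients would actually be run over a list of samples.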

Like anything else digital, the world of music production is evolving extremely quickly. We now have the capability to influence the perceived location of the music. One popular example is called 8D audio, and I highly recommend searching for examples of it on YouTube. It’s so convincing that if you close your eyes, it sounds like the musician is sitting in a chair performing live right behind you (for this effect, it’s imperative that you use headphones). To those of you who have considered dipping a toe into music production, don’t let this intimidate you! Music production tools are becoming more accessible and easier to use just as rapidly. Several digital audio workstations (DAWs) can be downloaded for free, with enough options and effects to keep you busy for days. There is an entire musical world to be explored at our fingertips. I hope this article gave you enough information to appreciate the truly elegant way in which this world was created.

References:

What Is A Microphone Diaphragm? (An In-Depth Guide)

Record players and phonographs

What Is Faraday’s Law of Induction?

(Lindsey Bellman – BIG Media Ltd., 2022)